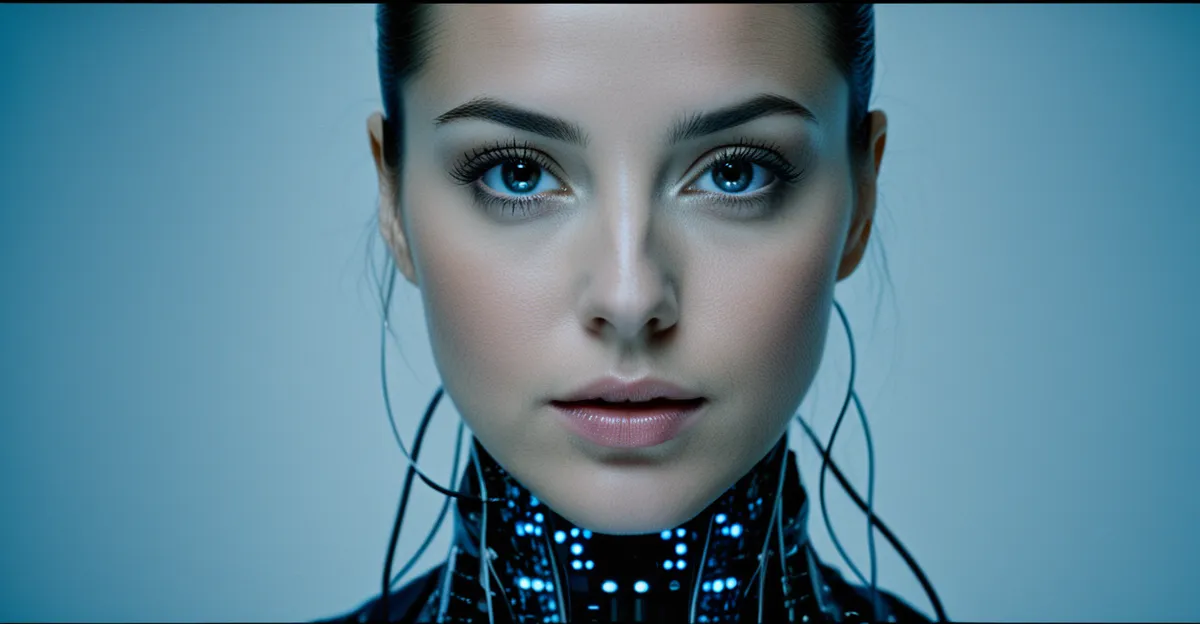

Overview of AI Risks in the UK Technology Sector

The landscape of AI risks in the UK encompasses the challenges that arise from the rapid deployment of artificial intelligence technologies. In the UK technology sector, these risks extend beyond technical glitches to affect citizens, businesses, and public institutions. The major concerns include data privacy breaches, algorithmic bias, and cyber threats, which together threaten trust and safety.

Citizens may face infringements of personal privacy and unfair treatment, while businesses risk reputational damage, financial loss, and legal liability from AI malfunctions or unethical use. Institutions must also guard against decision-making compromised by flawed AI outputs. These dangers are particularly acute because AI systems often operate with limited transparency, making it harder to detect errors or misuse early.

Addressing these risks is vital for ensuring the UK’s technological advancement aligns with societal values and legal standards. Proactive risk management within the UK technology sector fosters public confidence and encourages innovation that benefits all stakeholders. Understanding these risks helps ensure that AI deployment does not inadvertently cause harm, and it underscores the importance of strategic oversight in this evolving field.

Data Privacy and Security Concerns

Safeguarding personal data in AI applications

The rise of AI data privacy challenges in the UK technology sector demands urgent attention. AI systems process vast amounts of personal information, increasing risks of data misuse, unauthorized access, and breaches. This situation elevates cybersecurity risks uniquely tied to AI, including sophisticated attacks exploiting automated decision-making or data aggregation capabilities.

UK laws such as the UK General Data Protection Regulation (UK GDPR) and the Data Protection Act 2018 (DPA 2018) provide crucial frameworks for managing these AI data privacy risks. They set strict requirements on consent, data minimization, and transparency, aiming to prevent misuse and strengthen individuals’ control over their data. Organizations deploying AI must comply with these standards to mitigate privacy violations.
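To make the data minimization principle concrete, the sketch below shows one way a record might be reduced before it reaches an AI pipeline. It is an illustration only, not a compliance recipe: the field names, the `ALLOWED_FIELDS` set, and the `minimise` helper are all hypothetical, and pseudonymised data generally still counts as personal data under data protection law.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the model is assumed to need.
ALLOWED_FIELDS = {"age_band", "postcode_area"}


def minimise(record, secret_key):
    """Return a reduced copy of `record` with a pseudonymous token.

    The direct identifier is replaced by a keyed hash (HMAC-SHA256) so the
    same person maps to the same token without exposing the raw ID.
    """
    token = hmac.new(
        secret_key, record["user_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # Drop every field that is not on the allow-list.
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["user_token"] = token
    return reduced


# Hypothetical example record and key (a real key would be stored securely).
raw = {
    "user_id": "u-1001",
    "name": "Example Person",
    "age_band": "30-39",
    "postcode_area": "M1",
}
clean = minimise(raw, secret_key=b"example-key")
print(sorted(clean))
```

Fields such as `name` never leave the collection step in this sketch, and the keyed token lets records be linked across runs only by whoever holds the key.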

Recent incidents involving AI-based systems have exposed vulnerabilities, highlighting the ongoing struggle to balance innovation with robust data protection. These breaches have affected citizens and institutions alike, underlining the importance of continuously monitoring and improving AI security measures. By addressing AI data privacy and cybersecurity risks proactively, the UK technology sector can make AI deployment safer and more trustworthy.

Bias, Discrimination, and Fairness

Bias in AI systems remains a pressing challenge in the UK, particularly as algorithmic decision-making expands in sectors like recruitment and law enforcement. These AI-driven tools can unintentionally encode existing social prejudices, leading to unfair treatment that disproportionately affects protected groups and diverse communities. Discrimination risks arise when biased datasets or insufficiently vetted models generate skewed outcomes, undermining trust and equality.

Addressing algorithmic fairness is critical to countering these dangers. For example, biased AI in hiring might exclude qualified candidates based on gender or ethnicity, while in policing it could result in disproportionate surveillance or arrests. Such outcomes not only harm individuals but also threaten the ethical integrity of the UK technology sector.

Regulatory scrutiny focuses on ensuring transparency and fairness in AI deployment. UK authorities and standards bodies actively promote fairness initiatives, encouraging companies to audit algorithms rigorously and incorporate diverse perspectives during AI development. This multifaceted approach helps mitigate discrimination-related AI risks in the UK and fosters more equitable AI systems that better serve society’s needs.
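As a rough illustration of what a basic algorithm audit can involve, the sketch below compares selection rates across groups in a set of hiring decisions. The data, the group labels, and the 0.8 review threshold are hypothetical assumptions chosen for the example; the threshold is not a UK legal standard, and a real audit would look at many more metrics and at the context behind them.

```python
from collections import defaultdict


def selection_rates(records):
    """Compute the share of positive decisions per group.

    `records` is an iterable of (group, selected) pairs.
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to report
    return min(rates.values()) / highest


# Hypothetical screening outcomes for two applicant groups.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

REVIEW_THRESHOLD = 0.8  # illustrative assumption, not a legal test

rates = selection_rates(decisions)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, ratio, needs_review)
```

In this made-up data, group B is selected half as often as group A, so the check flags the tool for closer review. A low ratio does not prove discrimination on its own, but it indicates where an audit should look more closely.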